A capability that simply did not exist when our nation was founded is now so omnipresent that it has impacted every industry, every discipline, and every aspect of our lives. Computing power has transformed society – making the impossible routine and epitomizing the proverb, “necessity is the mother of invention.”

Though its origins predate NNSA, computing technology is now inextricably linked to the Nuclear Security Enterprise, and the brilliant minds at our National Laboratories will undoubtedly shape its future.

The first time mass data collection and analysis were truly demanded in the United States was for the census. Our forebears attempted to record our young nation’s total population in 1790 – with delayed and faulty results.

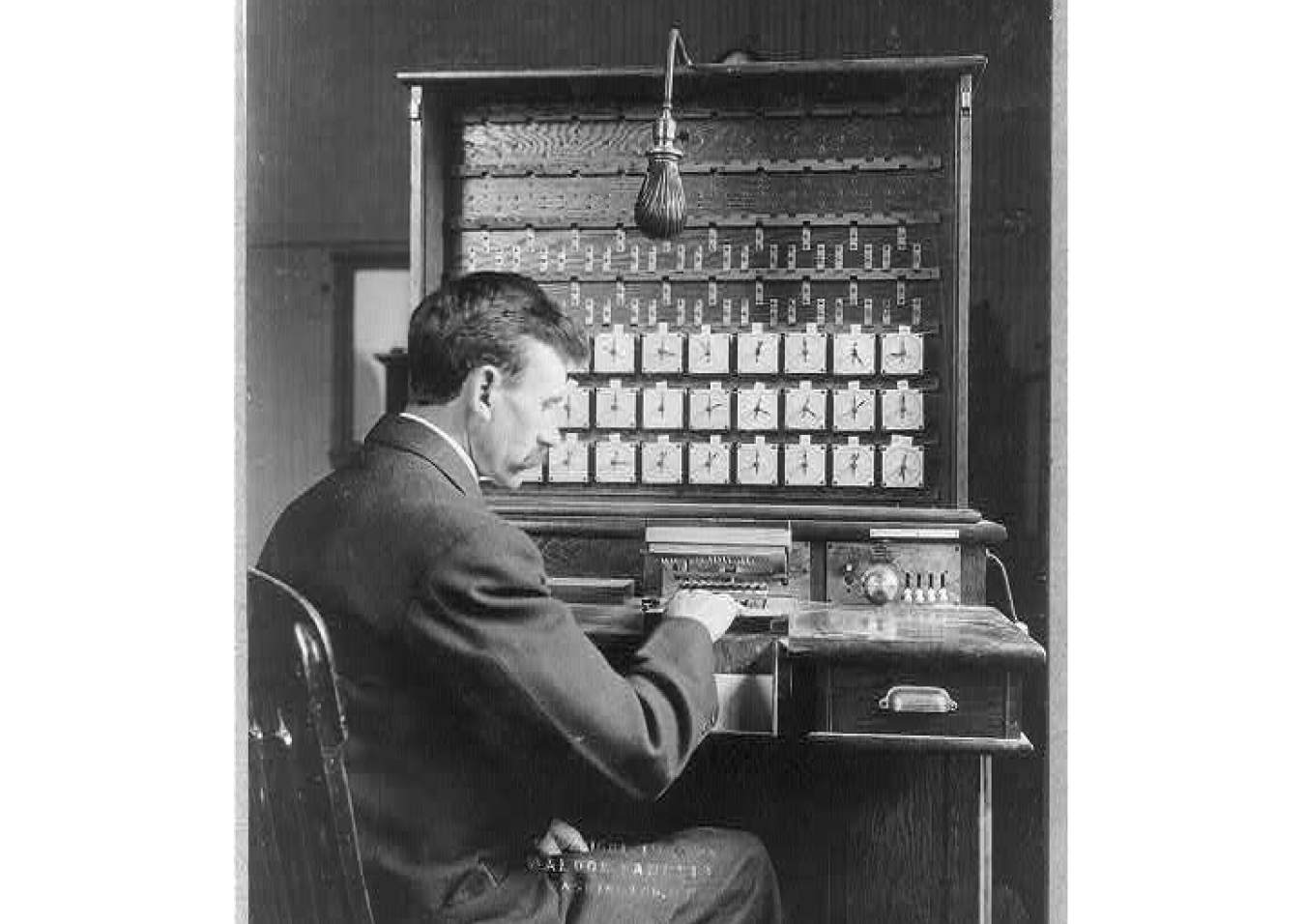

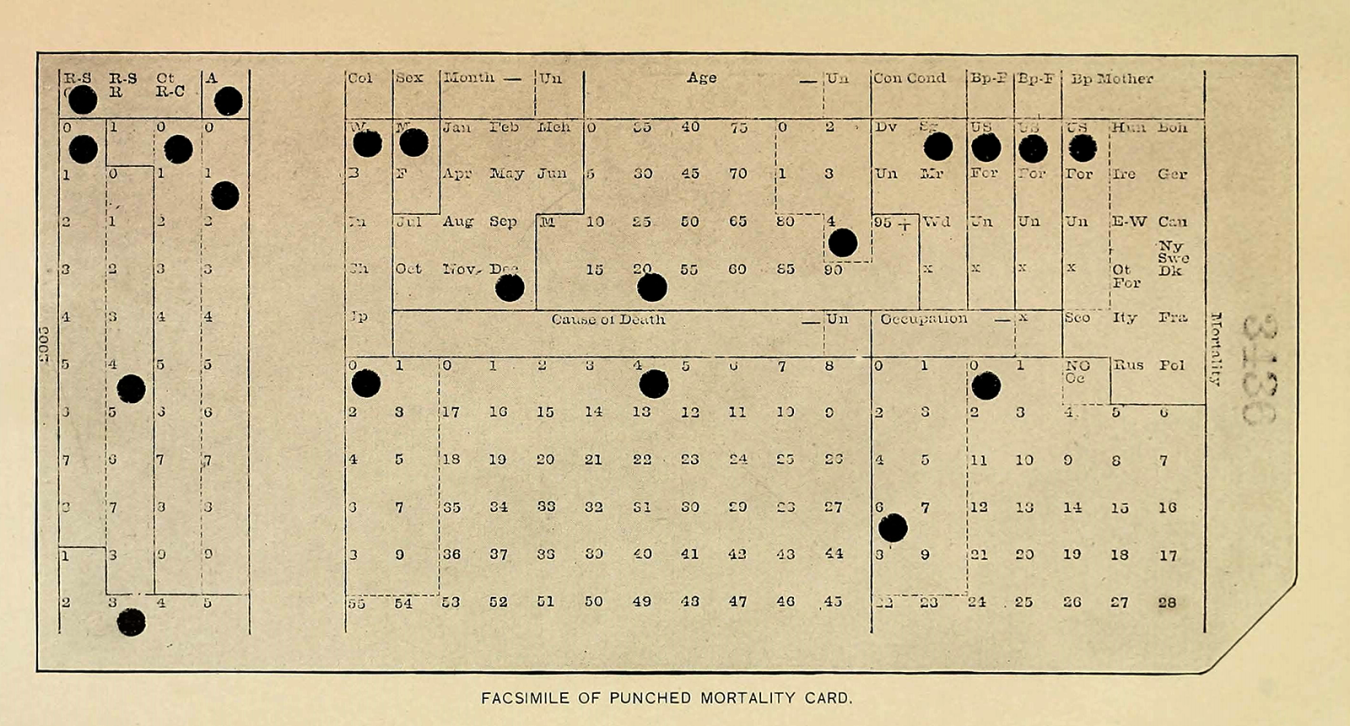

It was 100 years before a clever Census Bureau employee devised a solution. Herman Hollerith’s electromechanical tabulating machine used punch cards and was modeled after an automatic weaving machine. It efficiently and accurately automated the process of collecting and recording data. Hollerith went on to found the Tabulating Machine Company, which later merged with other firms to form the company known today as IBM.

Punch cards are made of thick paper with a grid pattern. Fields within the grid are punched out to represent a specific value. When the card is fed into a reader, metal pins pass through the holes in the card and complete a circuit on the other side. This revolutionary system was used in the 1890 census to determine the U.S. population in mere weeks, as opposed to years.
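The reading mechanism described above can be modeled in a few lines. This is a minimal Python sketch, not a reproduction of Hollerith's actual card layout: the ten-row grid, the names `punch_card` and `read_card`, and the rule that a hole in row *r* encodes the digit *r* are all assumptions chosen for illustration.

```python
# Simplified model of a punch card: a grid of positions, each either
# punched (True) or intact (False). The 10-row layout and the
# "row index = digit" encoding are illustrative assumptions, not
# Hollerith's actual 1890 card design.

ROWS = 10  # one row per digit 0-9 (assumed)


def punch_card(digits):
    """Return a card (a list of columns) with one hole punched per column."""
    card = []
    for d in digits:
        if not 0 <= d < ROWS:
            raise ValueError(f"digit out of range: {d}")
        column = [row == d for row in range(ROWS)]
        card.append(column)
    return card


def read_card(card):
    """Mimic the reader: a pin passing through a hole closes a circuit."""
    values = []
    for column in card:
        closed = [row for row, punched in enumerate(column) if punched]
        if len(closed) != 1:
            raise ValueError("expected exactly one hole per column")
        values.append(closed[0])
    return values


card = punch_card([1, 8, 9, 0])
print(read_card(card))  # [1, 8, 9, 0]
```

Each column here plays the role of one field on the card, and the position of the closed circuit is what carries the value.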

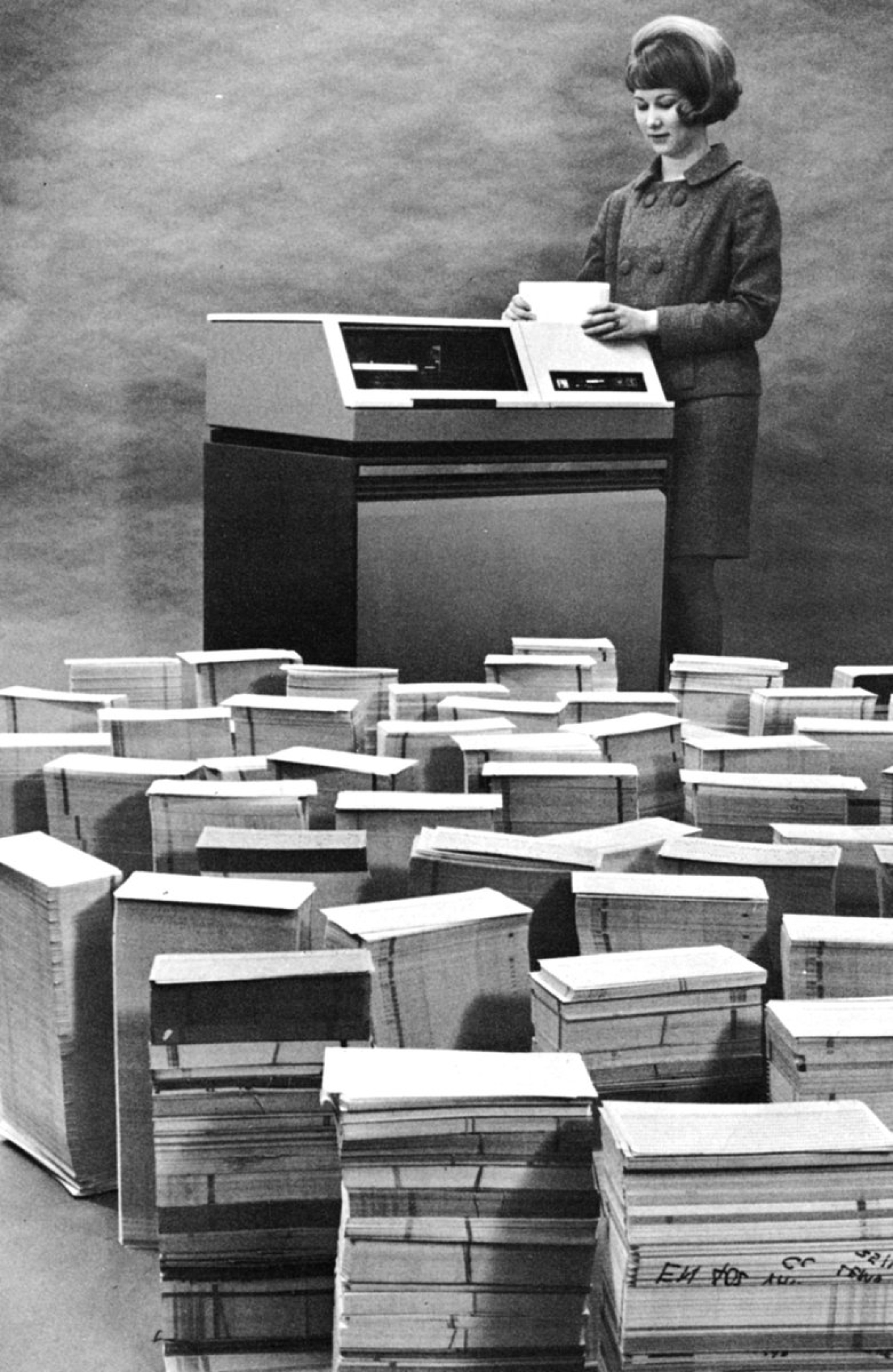

In 1964, the Kansas City National Security Campus installed a brand-new disk drive that could store 95,000 punch cards’ worth of data, or approximately 7.6 megabytes. This was cutting-edge technology at the time.
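The 7.6-megabyte figure can be checked with simple arithmetic. The sketch below assumes the standard 80-column card format, with each column holding one character stored as one byte; the article does not state the card format, so `CHARS_PER_CARD` is an assumption.

```python
# Check the figure quoted above: 95,000 punch cards ~ 7.6 megabytes.
# Assumption: each card holds 80 characters (the standard 80-column
# format), stored at one byte per character.

CHARS_PER_CARD = 80          # assumed card capacity
BYTES_PER_MEGABYTE = 10**6   # decimal megabyte


def cards_to_megabytes(cards):
    """Convert a number of punch cards to megabytes of raw data."""
    return cards * CHARS_PER_CARD / BYTES_PER_MEGABYTE


print(cards_to_megabytes(95_000))  # 7.6
```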

Technology has come a long way. Most modern email services allow a 25-megabyte file to be sent as a single attachment, more than three times the capacity of that drive. Storage capacity and processing power have grown by leaps and bounds, and NNSA has kept pace with the advent of high-performance computing, or supercomputing.

Computing power is now measured in floating-point operations per second (FLOPS). A floating-point number is stored as a set of significant digits plus an exponent, much like scientific notation, so the decimal point “floats” to wherever the size of the value requires.
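To make the idea of a "floating" point concrete, here is a small Python sketch using the standard library's `math.frexp`, which splits a number into a mantissa and a power-of-two exponent. The helper name `decompose` and the sample values are illustrative only.

```python
import math


def decompose(x):
    """Split x into (mantissa, exponent) so that x == mantissa * 2**exponent."""
    mantissa, exponent = math.frexp(x)
    return mantissa, exponent


# Three values of very different size share the same storage layout:
# only the exponent changes to move the point.
for value in (0.0000015, 1.5, 1_500_000_000.0):
    m, e = decompose(value)
    # Scientific notation is the base-10 analogue of the same idea.
    print(f"{value:.3e}  ->  {m:.6f} * 2**{e}")
    assert math.ldexp(m, e) == value  # reassembling gives back the value
```

Each multiplication or addition on numbers stored this way counts as one floating-point operation.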

The fastest supercomputers in the world operate at the petascale – a quadrillion (1,000,000,000,000,000 or 10^15) calculations each second. Exascale computing is the next frontier for digital might – systems capable of a quintillion (10^18) calculations each second.

NNSA’s Advanced Simulation and Computing program was established to process massive amounts of data at our National Laboratories and make the stockpile stewardship program possible. Complex multi-physics, multi-scale simulations are used to analyze and predict the performance, safety, and reliability of nuclear weapons and to certify their functionality.

The Trinity supercomputer at Los Alamos National Laboratory occupies over 5,000 square feet and boasts a peak performance of 41.5 petaFLOPS. It also has a memory capacity of nearly 2 petabytes – or about 2,000,000,000 megabytes. That’s a lot of punch cards! In addition to supporting our nuclear deterrent, the computing power of Trinity has predicted the spread of HIV, tackled antibiotic-resistant bacteria, and pushed the boundaries of machine learning.
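Just how many punch cards is that? The following rough Python sketch works it out under two assumptions not stated in the article: decimal units (1 petabyte = 10^15 bytes) and 80 one-byte characters per card. Because Trinity's memory is "nearly" 2 petabytes, the results are upper estimates.

```python
# Express Trinity's memory in megabytes and in punch cards.
# Assumptions: decimal units and 80 one-byte characters per card.

BYTES_PER_MEGABYTE = 10**6
BYTES_PER_PETABYTE = 10**15
CHARS_PER_CARD = 80          # assumed card capacity
CARDS_PER_1964_DRIVE = 95_000  # capacity of the 1964 disk drive


def petabytes_to_megabytes(pb):
    return pb * BYTES_PER_PETABYTE // BYTES_PER_MEGABYTE


def petabytes_to_cards(pb):
    return pb * BYTES_PER_PETABYTE // CHARS_PER_CARD


print(petabytes_to_megabytes(2))  # 2000000000
print(petabytes_to_cards(2))      # 25000000000000

# Number of 1964-era drives needed to hold the same data.
drives = petabytes_to_cards(2) // CARDS_PER_1964_DRIVE
print(f"{drives:,} drives")
```

Under these assumptions, Trinity's memory corresponds to about 25 trillion cards, or the contents of a few hundred million of the 1964 disk drives.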

However, even this TOP500 behemoth can’t keep up with the speed of NNSA’s newest supercomputer, Sierra. The system is housed at Lawrence Livermore National Laboratory and was built by IBM, which would doubtless make Herman Hollerith quite proud. Sierra currently ranks as the third fastest system on Earth. Its peak performance is an astounding 125 petaFLOPS.
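For a sense of scale, the sketch below estimates how long each machine would need to complete a quintillion (10^18) floating-point operations if it ran at its quoted peak rate the whole time. The workload size is hypothetical, and real applications rarely sustain peak performance, so these are best-case figures rather than measurements.

```python
# Best-case time to finish a fixed workload at each system's peak rate.
# The workload (10**18 operations) is a hypothetical round number.

PETA = 10**15
EXA = 10**18

peak_flops = {
    "Trinity": 41.5 * PETA,
    "Sierra": 125 * PETA,
    "Exascale system (1 exaFLOPS)": 1 * EXA,
}


def seconds_at_peak(operations, flops):
    """Idealized run time: total operations divided by operations per second."""
    return operations / flops


workload = EXA  # hypothetical job size
for name, flops in peak_flops.items():
    print(f"{name}: {seconds_at_peak(workload, flops):.1f} s")
```

On these idealized numbers, Sierra finishes the job about three times faster than Trinity, and an exascale machine would need just one second.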

Sierra is part of the CORAL partnership, a collaboration between Oak Ridge National Laboratory, Argonne National Laboratory, and Lawrence Livermore National Laboratory, established to leverage supercomputing investments, streamline procurement processes, and reduce costs.

NNSA’s Advanced Simulation and Computing program also funds Academic Alliances at universities across the nation to pursue various “predictive science” activities. Researchers are attempting to accurately model complex physics experiments that would not be practical or even possible to perform in real life. Supercomputing capabilities have been put to work to help explain the mystery of dark matter, optimize the power grid, and even analyze genomic data to search for a cancer cure.

The full potential of computing has likely yet to be realized. And though new challenges doubtless await, National Lab experts will be there to meet them, using the best technology available or inventing their own, to find a solution.